ยินดีต้อนรับทุกท่าน เข้าสู่ เว็บแทงหวยชั้นนำอันดับ1 ที่ดีที่สุดในประเทศไทย 2020 !

999lucky เว็บแทงหวยอออนไลน์อินเตอร์ โดยเรามีบริการ แทงหวยออนไลน์ มีระบบ ฝาก-ถอน รวดเร็วทันใจ เว็บแทงหวย เปิดให้บริการนานกว่า 10 ปี จึงมั่นใจได้ถึงความปลอดภัย และมีมาตรฐาน การบริการลูกค้า ที่เป็นเลิศ มั่นคงเรื่องการเงิน 100%

ยินดีต้อนรับทุกท่าน เข้าสู่ เว็บหวยออนไลน์ชั้นนำอันดับ1 มาเเรงที่สุดในไทย 2020 เว็บแทงหวย 999lucky นับได้ว่าเป็น เว็บแทงหวย ที่มีผู้คนให้ความสนใจและนิยมเล่นกันมากในตอนนี้ เนื่องทางเว็บมีการบริการที่ สะดวกสบาย ง่ายต่อการแทงของลุกค้า !!

999lucky เว็บหวยจ่ายเยอะ เรายังเปิดบริการให้ท่านได้เข้า แทงหวย หวยรัฐบาล หวยฮานอย หวยหุ้นไทย หวยจับยี่กี หวยหุ้นต่างประเทศ หวยเวียดนาม หวยลาว หวยปิงปอง huaydee ตลอด 24 ชั่วโมง !!

สมัครสมาชิกเพียง1นาที ที่สำคัญเรายังมีทีมงานแอดมิน ไว้คอยไว้บริการลูกค้า คอยให้คำปรึกษา ตอบคำถามท่านเสมือน ลูกค้า VIP ตลอด 24 ชั่วโมง อีกด้วย ครับ !!

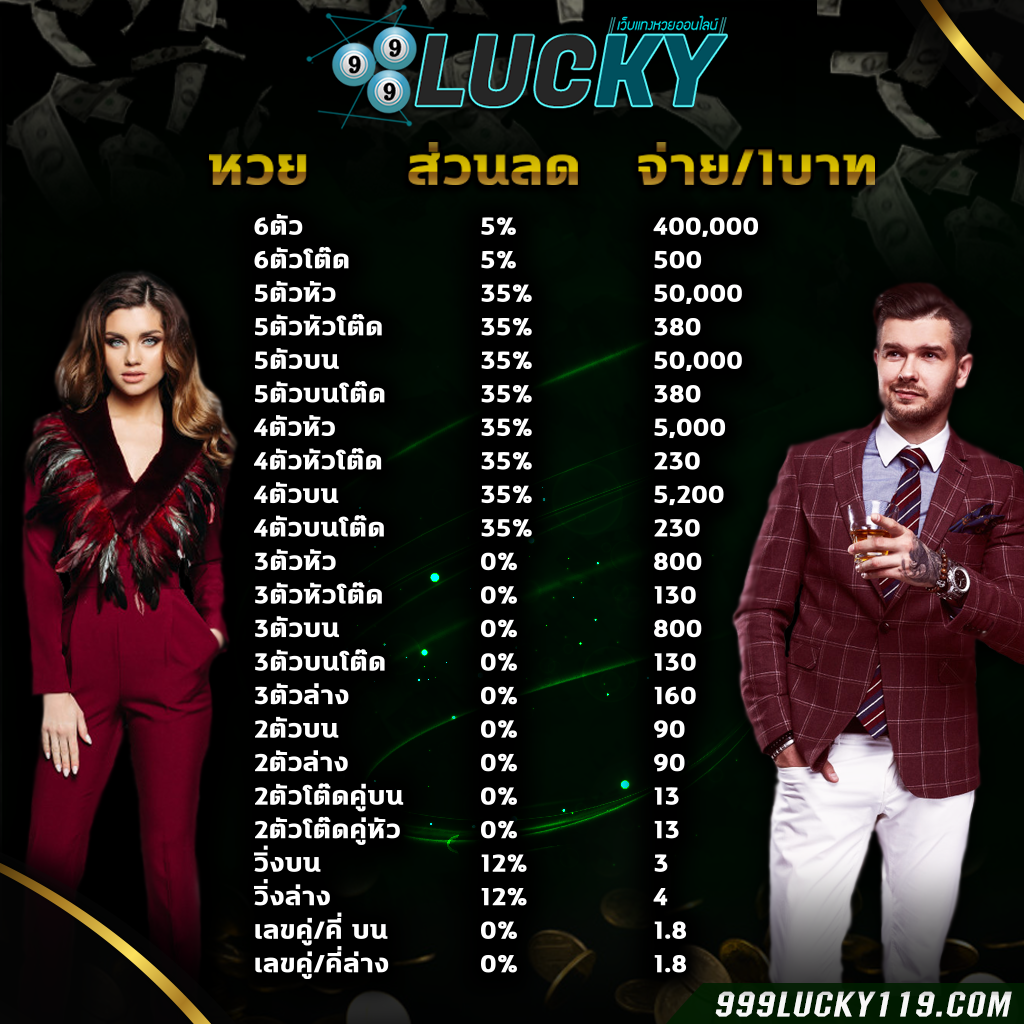

999LUCKY เว็บชั้นนำอันดับ1 ที่มี อัตราการจ่ายแพง ที่สุดในประเทศ 2020

999lucky เว็บหวยออนไลน์ ชั้นนำอัดดับ1 ในเมืองไทย ที่มีผู้คนและ คอหวย ทั้งหลายให้ความนิยมเป็นอย่างมาก ในขณะนี้ เนื่องจากเว็บมีระบบที่ทันสมัย สะดวก สบายง่ายต่อการใช้งานของลูกค้า และยังมีทีมงานคุณภาพมืออาชีพ ไว้คอยดูเเลตอบทุกคำถามทุกปัญหาลูกค้าอยู่ตลอด 24 ชั่วโมงอีกด้วยครับ สมัครสมาชิกฟรี !!

แถมทางเว็บเรายังมี กิจกรรมโปรโมชั่น มากมายเพื่อให้สมาชิกได้ เพลิด เพลิน กับทางเราอีกด้วย ท่านใดที่ยังมองหา เว็บหวยจ่ายเยอะ เว็บหวยชั้นนำ อยู่ท่านไม่ควรพลาด เว็บดีๆอย่าง 999lucky ไปนะครับ >>ติดต่อเรา<<

ข้อดีของหวยออนไลน์ 999lucky

- ง่ายต่อการซื้อ เเละ แถมยังสะดวกสบาย

- เลขดังซื้อได้ไม่อั้น เเละ ยังสามารถซื้อได้ถึง 15.30

- หากถูกราลวัลระบบจะตัดเงินให้ทันที

- มีอัตราการจ่ายเยอะกว่า เว็บ อื่นๆ

- สำคัญ จ่ายจริง จ่ายไว ไม่โกงมั่นคงปลอดภัย 100%

สำหรับทุกท่านที่กำลังมองหา เว็บแทงหวยออนไลน์ ที่ จ่ายจริง ไม่โกง เเละ ยังมี อัตราจ่ายแพงที่สุดในตอนนี้ เเละ มีคนไทยนิยม เล่นมากที่สุด 999lucky จึงเป็นอีก 1 เว็บแทงหวยอีกนึงที่คนไทยหลายๆคน ให้ความไว้วางใจ มากที่สุด !

999LUCKY เว็บแทงหวยออนไลน์ชั้นนำอันดับ 1 ที่มาแรงที่สุด 2020

มั่นคง ปลอดภัย จ่ายจริง ไม่โกง ระบบ ฝาก-ถอน รวดเร็วทันใจ ต้องที่นี่ที่เดียวเท่านั้น

หวยรัฐบาล | หวยยี่กี | หวยฮานอย | หวยมาเลย์ | หวยออนไลน์ | หวยหุ้นไทย